AlphaEvolve: GDM's AI Sidekick That's Redefining Discovery

From Crunching Code to Cracking Theorems: Human-AI Collaboration at Its Best!

AlphaEvolve, Google DeepMind’s evolutionary coding agent, is turning large language models (LLMs) into tireless research partners.

Launched in May 2025, it started by optimizing Google’s data centers and hardware. By October, it was helping to “prove” fundamental limits in theoretical computer science. Is this the dawn of AI-human tag teams tackling the universe’s toughest puzzles? (P.S. I consistently advocate for this human-in-the-loop, or HITL, element.)

For those who want a bit of history on GDM and AlphaEvolve’s long journey here, I have the following 3 posts:

The Self-Forging Mind: How AI is Learning to Evolve Itself Towards AGI

The whispers of Artificial General Intelligence (AGI) are growing louder, fueled by recent, breathtaking advancements in AI's ability to not just learn but “evolve itself.”

AlphaEvolve: The AI System That Upgrades Its Own Universe – But Has It Upgraded Itself?

Imagine an artificial intelligence that doesn't just retrieve information or follow instructions, but actively ventures into the unknown to discover novel solutions—solutions to scientific puzzles that have perplexed researchers for decades, or new ways to make th…

The Spark: How AlphaEvolve Was Born

Unveiled in the white paper “AlphaEvolve: A coding agent for scientific and algorithmic discovery”, this system harnesses the raw creativity of LLMs like Gemini Flash and Pro, but supercharges them with an evolutionary feedback loop. No more isolated code guesses: AlphaEvolve builds entire codebases iteratively and automatically evaluates every candidate, so the results are not just clever but deployable. The result? A tool that bridges the gap between “what if?” brainstorming and “it works!” reality. As DeepMind’s blog puts it, AlphaEvolve “goes beyond single function discovery to evolve entire codebases and develop much more complex algorithms.”

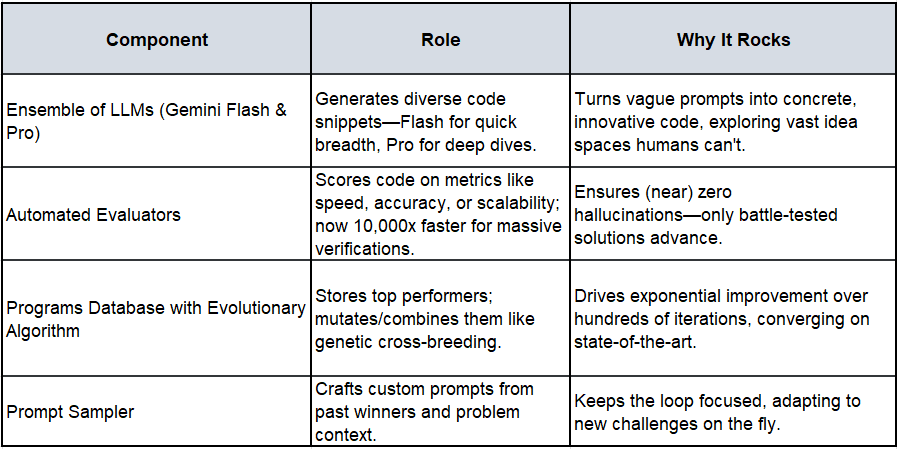

Peering Inside: The Building Blocks of AlphaEvolve

At its core, AlphaEvolve is a symphony of components working in harmony. Think of it as a digital Darwinian lab: ideas are born, tested, and bred for survival. Here’s a breakdown:

Key Components and Their Roles

Ensemble of LLMs (Gemini Flash and Gemini Pro): Acts as the creative engine. Gemini Flash explores a broad range of ideas quickly for diversity, while Gemini Pro dives deep for refined, insightful suggestions. Together, they generate code implementing algorithmic solutions based on prompts.

Automated Evaluators: The verification backbone. These run, test, and score generated programs against objective metrics (e.g., accuracy, efficiency, or functional correctness). Only programs that actually run and score well get to shape the next round.

Programs Database with Evolutionary Algorithm: The selection mechanism. It stores all generated programs and uses evolutionary principles (e.g., fitness-based selection) to pick the most promising ones for inclusion in future prompts, driving iterative improvement.

Prompt Sampler: The orchestrator. It assembles tailored prompts from selected programs and problem contexts to guide the LLMs in each cycle.
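To make the evaluator's role concrete, here is a minimal Python sketch. It assumes a candidate program is Python source that defines a `solve` function, and that the objective is passing test cases. The names `evaluate_candidate` and `solve` are hypothetical and are not AlphaEvolve's interface. A real evaluator would sandbox untrusted code and score domain metrics such as speed or bound quality.

```python
from typing import Optional


# Hypothetical evaluator sketch: not AlphaEvolve's real interface.
def evaluate_candidate(source: str, tests: list) -> Optional[float]:
    """Score candidate source code on (args, expected) test cases.

    Returns the fraction of tests passed (0.0 to 1.0), or None if the
    program fails to load, lacks a `solve` function, or crashes.
    """
    namespace: dict = {}
    try:
        # exec runs untrusted code; a production system would sandbox this step.
        exec(source, namespace)
        solve = namespace["solve"]
    except Exception:
        return None  # syntax error or missing entry point
    passed = 0
    for args, expected in tests:
        try:
            if solve(*args) == expected:
                passed += 1
        except Exception:
            return None  # any crash disqualifies the candidate
    return passed / len(tests)


if __name__ == "__main__":
    tests = [((1, 2), 3), ((0, 0), 0), ((5, -5), 0)]
    correct = "def solve(a, b):\n    return a + b\n"
    wrong = "def solve(a, b):\n    return a - b\n"
    broken = "def solve(a, b) return a + b"
    print(evaluate_candidate(correct, tests))  # 1.0
    print(evaluate_candidate(wrong, tests))    # partial credit
    print(evaluate_candidate(broken, tests))   # None
```

Returning `None` for crashes, rather than a low score, lets the rest of the loop treat broken programs as simply unfit.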

How It Works (Core Feedback Loop)

The system operates in a closed loop:

The prompt sampler crafts inputs drawing from high-performing prior programs.

LLMs generate new code variants.

Evaluators score them automatically.

Scored programs enter the database, where the evolutionary algorithm favors the top performers when choosing which programs to feed into the next round's prompts. The LLMs then mutate and combine them by proposing code changes.

This loop amplifies LLM strengths (idea generation) while mitigating weaknesses (hallucinations) through rigorous, automated feedback, often running for hundreds of iterations.
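The loop above can be sketched as a toy in Python, under several simplifying assumptions:

- A random coefficient tweak stands in for the LLM ensemble.
- The "program" is a one-line polynomial rendered as source code.
- The database is a small archive of the best-scoring programs, sampled by tournament selection.

All names (`propose_variant`, `select_parent`, `ARCHIVE_SIZE`, and so on) are hypothetical. The whitepaper describes a more elaborate evolutionary database than this.

```python
import random

ARCHIVE_SIZE = 20   # hypothetical: how many top programs the database keeps
XS = range(-5, 6)   # inputs the evaluator checks


def target(x):
    # Toy objective: the behaviour we want the evolved program to reproduce.
    return 3 * x * x - 2 * x + 5


def render(coeffs):
    # Turn candidate coefficients into program source (stand-in for LLM-written code).
    a, b, c = coeffs
    return f"def f(x):\n    return {a} * x * x + {b} * x + {c}\n"


def evaluate(source):
    # Automated evaluator: run the program and score it (0 is perfect, lower is worse).
    namespace = {}
    exec(source, namespace)
    f = namespace["f"]
    return -sum((f(x) - target(x)) ** 2 for x in XS)


def propose_variant(parent, rng):
    # Stand-in for the LLM ensemble: nudge one coefficient of the parent program.
    child = list(parent)
    i = rng.randrange(len(child))
    child[i] += rng.choice([-2, -1, 1, 2])
    return tuple(child)


def select_parent(database, rng, k=3):
    # Tournament selection: sample k stored programs and keep the fittest.
    contenders = rng.sample(database, min(k, len(database)))
    return max(contenders, key=lambda entry: entry[0])[1]


def evolve(iterations=500, seed=0):
    rng = random.Random(seed)
    start = (0, 0, 0)
    database = [(evaluate(render(start)), start)]  # entries are (score, coeffs)
    for _ in range(iterations):
        parent = select_parent(database, rng)               # pick a strong prior program
        child = propose_variant(parent, rng)                # "LLM" proposes a variant
        database.append((evaluate(render(child)), child))   # evaluator scores it
        # Keep only the top performers for future rounds.
        database.sort(key=lambda entry: entry[0], reverse=True)
        del database[ARCHIVE_SIZE:]
    return database[0]


if __name__ == "__main__":
    score, coeffs = evolve()
    print(score, coeffs)
    print(render(coeffs))
```

Tournament selection keeps some diversity, because the single best program is not always chosen as the parent. This is a crude stand-in for the exploration that the real system's database and prompt sampler manage.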

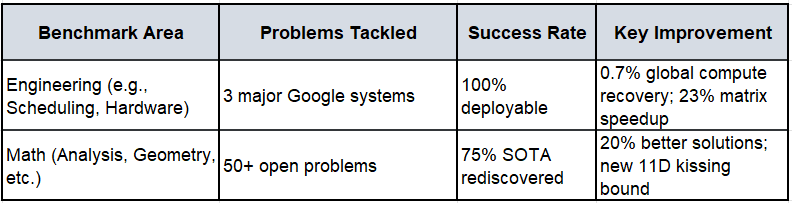

Practical Problems Solved in the Original Paper

The original work focused on real-world engineering and scientific challenges, demonstrating AlphaEvolve’s ability to deliver deployable improvements:

Data Center Scheduling: Evolved a heuristic for Google’s Borg orchestrator that recovers, on average, 0.7% of Google’s worldwide compute resources (in production for over a year).

Hardware Design: Rewrote Verilog code for a matrix multiplication circuit in an upcoming Tensor Processing Unit (TPU), passing full verification suites and enabling integration.

AI Training and Inference Optimization:

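Sped up a vital matrix multiplication kernel in Gemini’s architecture by 23%, by finding smarter ways to split large multiplications into subproblems. This cut Gemini’s overall training time by 1%. (Separately, it found an algorithm that multiplies 4x4 complex-valued matrices with 48 scalar multiplications. That beats the 49 needed when Strassen’s 1969 method is applied recursively.)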

Optimized low-level GPU instructions for the FlashAttention kernel used in Transformer-based models, yielding up to a 32.5% speedup for that kernel.

Mathematical Discovery: Tackled open problems in geometry (e.g., a new lower bound for the kissing number in 11 dimensions, via a configuration of 593 spheres). Across 50+ open problems in areas such as analysis, combinatorics, and number theory, it rediscovered state-of-the-art solutions in roughly 75% of cases and improved on them in 20%.
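The 48-multiplication result is easier to appreciate with Strassen's trick in hand. Strassen showed that a 2x2 matrix product needs only 7 multiplications instead of 8. If you treat a 4x4 matrix as a 2x2 grid of 2x2 blocks and apply the trick recursively, you get 7 × 7 = 49 scalar multiplications. That is the count AlphaEvolve's complex-valued algorithm improves on.

The sketch below implements standard recursive Strassen and counts scalar multiplications. It is not AlphaEvolve's 48-multiplication algorithm, which we do not reproduce here. The helper names and the list-based `counter` are illustrative choices, and the code assumes square matrices whose size is a power of two.

```python
def add(A, B):
    return [[a + b for a, b in zip(ra, rb)] for ra, rb in zip(A, B)]


def sub(A, B):
    return [[a - b for a, b in zip(ra, rb)] for ra, rb in zip(A, B)]


def split(M):
    # Split a square matrix into four equal quadrants.
    h = len(M) // 2
    return ([row[:h] for row in M[:h]], [row[h:] for row in M[:h]],
            [row[:h] for row in M[h:]], [row[h:] for row in M[h:]])


def join(C11, C12, C21, C22):
    # Reassemble four quadrants into one matrix.
    return [r1 + r2 for r1, r2 in zip(C11, C12)] + [r1 + r2 for r1, r2 in zip(C21, C22)]


def strassen(A, B, counter):
    # counter is a one-element list used to tally scalar multiplications.
    if len(A) == 1:
        counter[0] += 1
        return [[A[0][0] * B[0][0]]]
    A11, A12, A21, A22 = split(A)
    B11, B12, B21, B22 = split(B)
    # Seven block products instead of eight.
    M1 = strassen(add(A11, A22), add(B11, B22), counter)
    M2 = strassen(add(A21, A22), B11, counter)
    M3 = strassen(A11, sub(B12, B22), counter)
    M4 = strassen(A22, sub(B21, B11), counter)
    M5 = strassen(add(A11, A12), B22, counter)
    M6 = strassen(sub(A21, A11), add(B11, B12), counter)
    M7 = strassen(sub(A12, A22), add(B21, B22), counter)
    C11 = add(sub(add(M1, M4), M5), M7)
    C12 = add(M3, M5)
    C21 = add(M2, M4)
    C22 = add(add(sub(M1, M2), M3), M6)
    return join(C11, C12, C21, C22)


def naive(A, B):
    # Textbook product: n**3 scalar multiplications.
    n = len(A)
    return [[sum(A[i][k] * B[k][j] for k in range(n)) for j in range(n)] for i in range(n)]


if __name__ == "__main__":
    A = [[complex(i + j, i - j) for j in range(4)] for i in range(4)]
    B = [[complex(2 * i - j, i * j) for j in range(4)] for i in range(4)]
    counter = [0]
    assert strassen(A, B, counter) == naive(A, B)
    print(counter[0])  # 49 scalar multiplications (the naive method uses 64)
```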

These applications highlighted AlphaEvolve’s strength in “practical” domains: optimizing production systems where gains translate directly to efficiency and cost savings.

This setup creates a self-reinforcing cycle: Generate → Evaluate → Evolve → Repeat. To visualize, here’s a simple process flow diagram of the feedback loop (rendered in Mermaid for clarity):

```mermaid
graph TD
    A["Start: Problem Prompt"] --> B["LLM Ensemble Generates Code Variants"]
    B --> C["Automated Evaluators Score & Verify"]
    C --> D{"Top Performers?"}
    D -->|Yes| E["Store in Database & Evolve via Mutations/Combinations"]
    D -->|No| F["Discard & Iterate"]
    E --> G["Prompt Sampler Builds Next Prompt"]
    G --> B
    F --> B
    style A fill:#f9f,stroke:#333
    style D fill:#bbf,stroke:#333
```

This loop isn’t just efficient; it’s scalable. In practice, it runs autonomously for days, with humans stepping in only for high-level strategy. For the nitty-gritty details, check the original paper on arXiv.

From Lab to Live: AlphaEvolve’s Real-World Victories

AlphaEvolve didn’t stay theoretical—it hit the ground running with tangible wins that saved Google time and money (per above). These aren’t lab curiosities; they’re production-ready tweaks deployed at scale.

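As mentioned, these feats show AlphaEvolve’s knack for “practical” domains, especially where metrics are king: faster code, lower costs, bigger impact. Here’s a quick comparison table of its early benchmarks:

| Domain | What AlphaEvolve did | Reported impact |
|---|---|---|
| Data center scheduling | Evolved a heuristic for Google’s Borg orchestrator | ~0.7% of worldwide compute recovered on average, sustained for over a year |
| Hardware design | Rewrote Verilog for a TPU matrix multiplication circuit | Passed full verification; integrated into an upcoming TPU |
| Gemini training | Sped up matrix multiplication | 23% faster; ~1% less Gemini training time |
| GPU kernels | Optimized FlashAttention instructions | Up to 32.5% speedup |
| Mathematics | Attacked 50+ open problems | State of the art matched in ~75%, improved in ~20% |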

The Quantum Leap: Tackling Complexity Theory’s Dark Corners

Fast-forward to October 2025: AlphaEvolve didn’t rest on its laurels. In an exciting update, it stormed theoretical computer science, proving limits on problems that underpin everything from optimization to crypto.

This wasn’t a pivot—it was an expansion, adapting the same loop to evolve “proof gadgets” and graphs for hardness proofs.

Standout breakthroughs:

MAX-4-CUT Inapproximability: Tightened the bound to 0.987 (from 0.9883) with a 19-variable gadget. This is a hyper-intricate puzzle piece in which some connections carry up to 1,429 times the weight of others. Translation: unless P = NP, no efficient algorithm can guarantee a 4-way graph split that captures more than 98.7% of the best possible cut. It’s like proving you can’t reliably get near the ideal division of a social network without massive compute.

Ramanujan Graph Extremes: Found Ramanujan graphs (extreme expander graphs) with unusually large cuts on up to 163 nodes (vs. the previous record of 10). This strengthens average-case hardness results, showing that certifying bounds for problems like MAX-CUT on sparse random graphs is brutally tough.

Verification Turbocharge: A 10,000x speedup in verifying candidates via branch-and-bound search, turning day-long checks into minutes. The final results were still confirmed with brute-force verification, which remains the gold standard for accuracy.
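MAX-4-CUT asks you to split a weighted graph's vertices into four groups so that the total weight of edges running between different groups is as large as possible. The 0.987 result above means exact or near-exact answers cannot be had cheaply, which is why verification speed matters so much.

The toy sketch below contrasts two exact searches:

- a brute-force search over all 4^n labelings;
- a branch-and-bound search that prunes partial labelings which cannot beat the best cut found so far.

It then checks that both give the same answer, mirroring the practice of keeping brute force as the reference. It is not the paper's gadget verifier. The helper names are hypothetical, and edge weights are assumed to be non-negative.

```python
from itertools import product


def cut_value(labels, edges):
    # Total weight of edges whose endpoints sit in different parts.
    return sum(w for u, v, w in edges if labels[u] != labels[v])


def max_k_cut_brute_force(n, edges, k=4):
    # Reference answer: try every one of the k**n labelings.
    return max(cut_value(labels, edges) for labels in product(range(k), repeat=n))


def max_k_cut_branch_and_bound(n, edges, k=4):
    # Assumes non-negative weights, so an undecided edge adds at most its weight.
    back_edges = [[] for _ in range(n)]  # per vertex: edges to earlier vertices
    for u, v, w in edges:
        back_edges[max(u, v)].append((min(u, v), w))
    # remaining[i]: weight of edges still undecided once vertices 0..i-1 are labeled.
    remaining = [0] * (n + 1)
    for i in range(n - 1, -1, -1):
        remaining[i] = remaining[i + 1] + sum(w for _, w in back_edges[i])
    best = 0
    labels = [0] * n

    def search(i, current):
        nonlocal best
        if current + remaining[i] <= best:
            return  # prune: cutting every undecided edge still cannot beat best
        if i == n:
            best = current
            return
        # Part names are interchangeable, so vertex 0 can always go in part 0.
        for part in range(1 if i == 0 else k):
            labels[i] = part
            gain = sum(w for j, w in back_edges[i] if labels[j] != part)
            search(i + 1, current + gain)

    search(0, 0)
    return best


if __name__ == "__main__":
    # Complete graph on 7 vertices with deterministic toy weights.
    edges = [(u, v, (3 * u + 5 * v) % 7) for u in range(7) for v in range(u + 1, 7)]
    exact = max_k_cut_brute_force(7, edges)
    fast = max_k_cut_branch_and_bound(7, edges)
    assert exact == fast  # the pruned search must agree with the gold standard
    print(exact)
```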

These aren’t incremental; they’re frontiers humans couldn’t brute-force alone. As Philipp Schmid tweeted, it’s “human-AI collaboration at its best.”

Dive into the details via DeepMind’s research blog, arXiv, or Schmid’s X post.

Evolution, Not Revolution: Spotting the Threads

No stark contrasts here, just seamless growth. The original AlphaEvolve crushed applied challenges (e.g., chip designs with clear speed metrics). The October push extended it into theory: small, finite gadgets and graphs that underpin hardness proofs holding at every input size. That extension was made possible by evaluator upgrades that tame combinatorial explosions.

Same DNA: LLM creativity + evolutionary grind + human guidance. It’s like upgrading from a sports car to a rocket—faster, farther, but still loves the road. This adaptability hints at AlphaEvolve’s secret sauce: framing any “evolvable” problem as code-search with verifiable scores.
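One way to picture “framing a problem as code-search with verifiable scores” is a program template with two parts: a fixed evaluation harness, and a marked region that evolution is allowed to rewrite. The whitepaper illustrates this idea with marker comments along the lines of `EVOLVE-BLOCK-START` / `EVOLVE-BLOCK-END`. The exact marker strings, the toy objective (approximating `sin` on [0, 1]), and the helper names below are assumptions for illustration.

```python
# Hypothetical problem template: only the marked block may be rewritten.
TEMPLATE = """\
import math

# EVOLVE-BLOCK-START
def heuristic(x):
    return 0.0
# EVOLVE-BLOCK-END

def evaluate():
    # Fixed harness: a verifiable score (higher is better, 0 is perfect).
    xs = [i / 10 for i in range(11)]
    return -sum((heuristic(x) - math.sin(x)) ** 2 for x in xs)
"""

START, END = "# EVOLVE-BLOCK-START", "# EVOLVE-BLOCK-END"


def split_evolve_block(source):
    # Return (prefix, evolvable block, suffix); the markers stay in prefix/suffix.
    start = source.index(START) + len(START)
    end = source.index(END, start)
    return source[:start], source[start:end], source[end:]


def splice(source, new_block):
    # Replace only the evolvable region, leaving the harness untouched.
    prefix, _, suffix = split_evolve_block(source)
    return prefix + new_block + suffix


def run(source):
    # Execute the full program and return its score (sandbox this in practice).
    namespace = {}
    exec(source, namespace)
    return namespace["evaluate"]()


if __name__ == "__main__":
    baseline = run(TEMPLATE)
    candidate = splice(TEMPLATE, "\ndef heuristic(x):\n    return x - x ** 3 / 6\n")
    print(baseline, run(candidate))  # the candidate scores much closer to 0
```

Keeping the harness outside the evolvable region means a candidate cannot "improve" its score by rewriting the scoring code.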

The Horizon: AlphaEvolve’s Promise for Tomorrow’s Thinkers

To me, AlphaEvolve isn’t just a tool; it’s a mindset shift. By generalizing to any discrete, metric-driven domain—quantum sims, bio designs, climate models—it could slash years off discoveries. Imagine: AI evolving drug molecules or fusion controls while you sip coffee. A stretch, maybe? Then again, look at what GDM have done. As we stand today, this feels like the “AlphaGo moment for math and engineering”. Will it solve P=NP? Probably not solo—but with us? The sky’s the ultimate limit. For more, grab the full PDF whitepaper or geek out on Hacker News discussions.

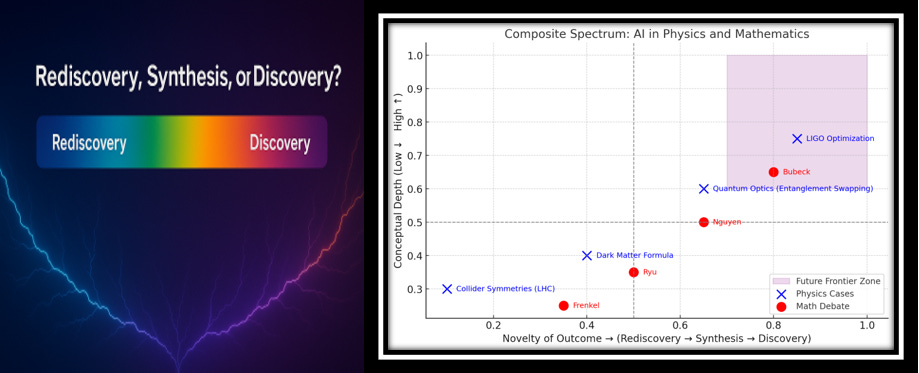

As an aside, a similar debate, in a different setting, followed the release of OpenAI’s GPT-5 Pro. I discussed and weighed the viewpoints there, especially on the “novelty” of discoveries made by AI models and agents:

When AI Proves Theorems and Tunes Detectors: Rediscovery, Synthesis, or (Novel) Discovery?

🌌 The Frontier of AI in Science

And this (a continuation of the above piece):

Enjoy the read(s). It has been an exciting few weeks. Or should I say few years?

https://substack.com/@interestingengineering/note/c-174830711?r=223m94