Symbolic Compression and Architecture of Thought

Why Distilled Intuition, Recurrent Hierarchies, and Externalized Reasoning Signals Matter More Than Scale (Trajectory > = Taxonomy)

🚀 Toward More Capable Minds: Beyond AGI or ASI as Buzzwords

Autonomous Generalized Systems are not just silicon analogs of human intelligence—they represent the transition from tools that serve to systems that think, plan, and evolve alongside us. In a landscape where definitions of AGI and ASI remain elusive, what truly matters is not taxonomy, but trajectory: these systems are about doing more with technology—more synthesis, more agency, more meaningful impact. They aren't endpoints, but inflection points in the pursuit of adaptive cognition.

Note on Title:

🧠 Symbolic Compression

At its core, this refers to the process of distilling complex knowledge, experiences, or patterns into compact, manipulable symbols. It’s how models begin to abstract:

Turning long chains of reasoning into reusable "mental shortcuts" or tokens of logic.

Capturing high-level strategies, goals, or insights into smaller, actionable representations.

Creating symbols not just of things, but of processes—like intuition distilled from prior decision patterns.

In systems terms, symbolic compression can help:

Reduce computational overhead by reusing high-value heuristics.

Enable transfer learning across domains with minimal re-training.

Surface “conceptual primitives” that shape how models think and generalize.

🏗️ Architecture of Thought

This speaks to how reasoning emerges structurally inside AI systems:

How modules like memory, attention, planning, and reflection interrelate.

Whether models engage in recurrent reasoning loops, externalize thought, or compose abstract mental maps.

The blueprint behind how signals—like goals, constraints, or causal knowledge—travel, transform, and integrate.

It’s not just about weights and tokens—it’s about cognitive design:

Does the system treat reasoning as a linear chain, a graph traversal, or symbolic interplay?

How are decisions scaffolded over time?

Are thoughts compressed into inner representations, or expanded across multi-agent structures?

The AI landscape is shifting towards sophisticated reasoning in LLMs. With tool use, autonomous planning, and self-verification, proto-agentic systems are evolving with it. However, these systems need to prove their various “reasoning” and tool-use capabilities in wider, more generalized fields, under safer guardrails and better alignment, before everybody jumps in with “unfettered enthusiasm”.

The “shifting sands of creativity,” however, land in our e-mailboxes every day, and I cannot help but be excited about the future.

Human beings are adaptable. Whilst jagged edges will prevail for a while, I would like to think we will also thrive in the long term. This is a follow-up, slightly more detailed breakdown of yesterday's summary notes. It includes the “deluge” of interesting model releases in the past week (I believe one of the highest levels of releases), many of them innovative: 🚀Cogito v2, GLM-4.5, HRM, Falcon-H1🚀. Alright, on with it! What I will cover:

Key trends:

Architectural Innovations: Widespread Mixture-of-Experts (MoE) and hybrid Transformer-State Space Model (SSM) designs for efficiency and performance.

Advanced Post-Training: Reinforcement learning and distillation are crucial for refining model behaviors and cognitive abilities.

Proto-Agentic Systems: Models are moving towards tool use, autonomous planning, and self-verification.

Regional Approaches:

Chinese Developers: Often emphasize unified dual-mode operations (thinking vs. non-thinking) and externalized reasoning aids (e.g., knowledge graphs).

US Developers: Explore iterated distillation for "intuitive" reasoning and transparent, verifiable reward-based training.

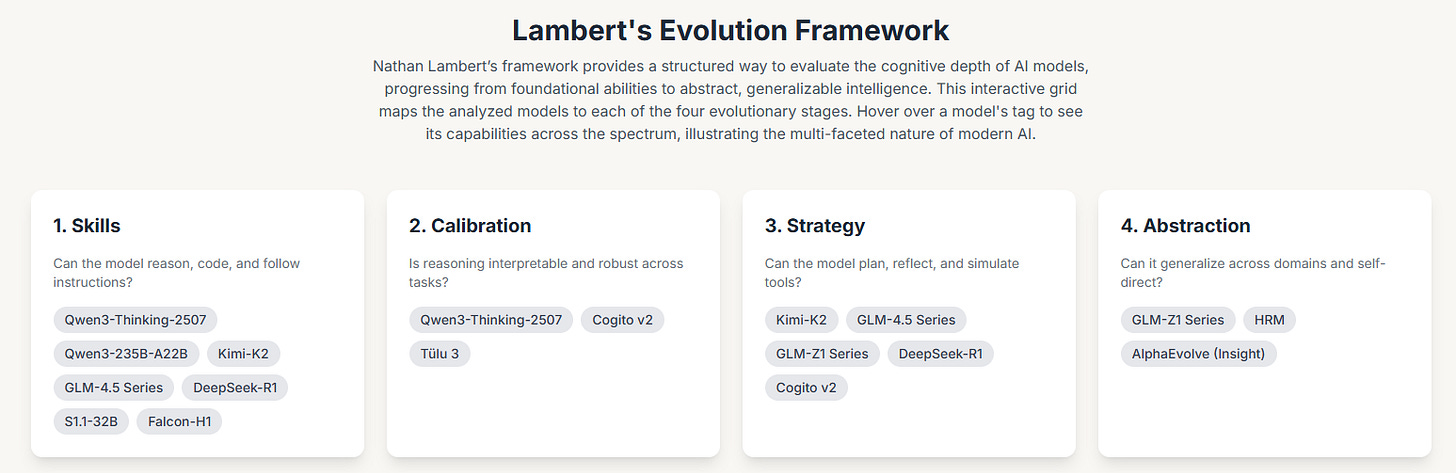

Lambert's Framework: Models show clear progression through Skills, Calibration, Strategy, and Abstraction, moving towards robust, interpretable, and generalized intelligence.

Future Implications: Meta-learning systems like AlphaEvolve suggest AI may autonomously refine its own algorithms and architectures, accelerating self-improvement.

Mapping the Modern Evolution of Reasoning Models

The Imperative for Advanced Reasoning in AI

Shift in AI: From pattern recognition to complex, multi-step reasoning.

Driving Force: Demand for robust, interpretable, and generalizable AI for real-world applications (e.g., science, engineering, medicine, autonomous systems).

Goal: AI as an intelligent partner, capable of reasoning, planning, and adapting.

Report Objectives and Scope

Analyze & Contrast: Recent AI models (reasoning-optimized, proto-agentic) from Chinese and US developers.

Focus Areas: Architectural uniqueness, training/post-training efficiency, model size vs. performance scaling, reasoning modalities.

Evaluation Framework: Nathan Lambert’s progression framework (Skills → Calibration → Strategy → Abstraction).

Symbolic Comparisons: Illustrate convergence/divergence of distillation, architectural influence, and agentic planning.

Closed-Source Learning: Reflect on implications from systems like DeepMind's AlphaEvolve.

Introduction to Nathan Lambert’s Progression Framework

Architectural Innovations and Reasoning Modalities

1. Mixture-of-Experts (MoE) Architectures: Scaling for Specialized Intelligence

MoE designs enable vast parameter counts with efficient inference by activating only a subset of parameters.
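
To make "activating only a subset of parameters" concrete, here is a minimal sketch of top-k expert routing for a single token. It is a toy under stated assumptions, not the routing used by any model listed below: the linear router, the four scaling "experts", and the names `moe_forward` and `router_weights` are all hypothetical.

```python
import math

def softmax(xs):
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def moe_forward(x, router_weights, experts, top_k=2):
    """Route one token vector x through the top_k highest-scoring experts.

    router_weights: one weight vector per expert (hypothetical linear router).
    experts: list of callables mapping a vector to a vector.
    """
    # Router logit for each expert is a dot product with the token.
    logits = [sum(w_i * x_i for w_i, x_i in zip(w, x)) for w in router_weights]
    # Keep only the top_k experts; the others are never evaluated.
    chosen = sorted(range(len(experts)), key=lambda i: logits[i], reverse=True)[:top_k]
    gates = softmax([logits[i] for i in chosen])  # renormalise over chosen experts
    out = [0.0] * len(x)
    for gate, idx in zip(gates, chosen):
        y = experts[idx](x)
        out = [o + gate * y_i for o, y_i in zip(out, y)]
    return out, chosen

# Four toy experts that simply scale the input by a different factor.
experts = [lambda v, s=s: [s * v_i for v_i in v] for s in (1.0, 2.0, 3.0, 4.0)]
router_weights = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0], [0.0, -1.0]]

output, active = moe_forward([0.5, 2.0], router_weights, experts, top_k=2)
print(active)  # indices of the experts that actually ran
print(output)
```

Only two of the four experts run for this token, so compute per token scales with `top_k` rather than with the total number of experts.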

Qwen3-235B-A22B & Qwen3-Thinking-2507 (Chinese) :

Architecture: 235B total parameters, 22B active per token across 94 layers.

Key Feature: Unified "thinking mode" for complex reasoning and "non-thinking mode" for rapid responses.

Innovation: "Thinking budget" for dynamic resource allocation, optimizing reasoning cost.

Kimi-K2 & Kimi-K2-Instruct (Chinese) :

Architecture: 1 trillion total parameters, 32B active.

Key Feature: "Post-trained in reasoning before acting and planning" for token conservation and clear decision-making.

Innovation: Internalized/compiled reasoning for efficiency, effective for real-time agentic applications.

GLM-4.5 / GLM-4.5-Air (Chinese) :

Architecture: GLM-4.5 (355B total, 32B active); GLM-4.5-Air (106B total, 12B active).

Key Feature: Dual-mode toggle ("thinking" for complex tasks, "non-thinking" for instant responses).

Innovation: Unifies capabilities for reasoning, coding, and agentic tasks, simplifying deployment.

DeepSeek-v3 (Chinese) :

Architecture: 671B total parameters, 37B active per token. Integrates Multi-head Latent Attention (MLA) and DeepSeekMoE.

Innovation: Auxiliary-loss-free strategy for load balancing; multi-token prediction objective for performance and cost-efficiency.

Training: Stable pre-training on 14.8 trillion tokens with "zero loss spike."

Cogito v2 (US) :

Architecture: Dense (70B, 405B) and MoE (109B, 671B) variants.

Key Feature: "Self-improvement via distillation" and "iterative policy improvement" to internalize reasoning.

Innovation: Achieves significantly "shorter reasoning chains" (60% shorter than DeepSeek R1) by fostering "intuition" for solutions, leading to faster inference and lower token costs.
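
As a quick sanity check on the sparsity of the MoE models above, the share of parameters active per token can be computed directly from the quoted totals. The figures are the ones stated in this section (in billions); Cogito v2 is left out because no active-parameter count is given for it here.

```python
# (total parameters, active parameters per token), in billions, as quoted above.
models = {
    "Qwen3-235B-A22B": (235, 22),
    "Kimi-K2": (1000, 32),
    "GLM-4.5": (355, 32),
    "GLM-4.5-Air": (106, 12),
    "DeepSeek-V3": (671, 37),
}

def active_fraction(total_b, active_b):
    """Fraction of all parameters that participate in one token's forward pass."""
    return active_b / total_b

for name, (total_b, active_b) in models.items():
    share = 100 * active_fraction(total_b, active_b)
    print(f"{name:>16}: {share:.1f}% of parameters active per token")
```

On these numbers, each token touches roughly 3% to 11% of the full parameter count.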

2. Hybrid and Recurrent Architectures: Beyond Pure Transformers

Falcon-H1 (TII, UAE) :

Architecture: Parallel hybrid combining Transformer attention with Mamba-based State Space Models (SSMs).

Innovation: Independent adjustment of attention and SSM head ratios for performance and efficiency.

Efficiency: Smaller models (e.g., 0.5B, 1.5B-Deep) rival much larger traditional models (7B-10B). Strong multilingual capabilities (18 languages).
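
The "parallel hybrid" idea can be sketched in a few lines: the same input goes through an attention mixer and a state-space mixer side by side, and their outputs are concatenated. This is a deliberately simplified toy, not Falcon-H1's implementation: the attention has identity projections, the SSM is a plain fixed-coefficient linear recurrence rather than a selective Mamba layer, and all function names and constants are assumptions.

```python
import math

def causal_attention(xs):
    """Single-head causal self-attention with identity Q/K/V projections (toy)."""
    d = len(xs[0])
    out = []
    for t, q in enumerate(xs):
        scores = [sum(a * b for a, b in zip(q, xs[s])) / math.sqrt(d) for s in range(t + 1)]
        m = max(scores)
        weights = [math.exp(s - m) for s in scores]
        z = sum(weights)
        out.append([sum(w / z * xs[s][i] for s, w in enumerate(weights)) for i in range(d)])
    return out

def linear_ssm(xs, a=0.9, b=0.1, c=1.0):
    """Diagonal linear state-space recurrence: h_t = a*h_{t-1} + b*x_t, y_t = c*h_t."""
    h = [0.0] * len(xs[0])
    out = []
    for x in xs:
        h = [a * h_i + b * x_i for h_i, x_i in zip(h, x)]
        out.append([c * h_i for h_i in h])
    return out

def parallel_hybrid_block(xs):
    """Run both mixers on the same input and concatenate their per-token outputs."""
    attn_out = causal_attention(xs)
    ssm_out = linear_ssm(xs)
    return [att + ssm for att, ssm in zip(attn_out, ssm_out)]  # list concatenation

tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
mixed = parallel_hybrid_block(tokens)
print(len(mixed), len(mixed[0]))  # 3 tokens, each with 2 attention dims + 2 SSM dims
```

In this sketch the ratio between the two branches is fixed at 1:1; widening one branch relative to the other is the kind of independent adjustment the text refers to.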

HRM (Hierarchical Reasoning Model) (Sapient Intelligence, Singapore) :

Architecture: Novel recurrent architecture with 27 million parameters.

Inspiration: Hierarchical and multi-timescale processing in the human brain.

Key Feature: Two interdependent recurrent modules: high-level (slow, abstract planning) and low-level (rapid, detailed computations).

Innovation: Achieves computational depth in a single forward pass without explicit CoT supervision; uses "hierarchical convergence" to prevent premature convergence. Exceptional performance on complex reasoning tasks (Sudoku, maze, ARC-AGI) with ~1000 training samples, no pre-training or CoT data.
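
The two-timescale structure described above can be illustrated with a toy nested recurrence: a fast low-level state updates several times for every single update of the slow high-level state. The scalar states, the `tanh` update rules, and the coefficients are invented for illustration and are not taken from the HRM paper.

```python
import math

def hrm_forward(x, n_cycles=3, t_low=4):
    """Toy two-timescale recurrence with scalar states.

    z_low updates every step; z_high updates once per cycle, after the
    low-level module has run t_low steps under a fixed high-level context.
    """
    z_high, z_low = 0.0, 0.0
    low_steps = 0
    trace = []
    for cycle in range(n_cycles):
        for _ in range(t_low):
            # Fast module: conditioned on the input and the current slow state.
            z_low = math.tanh(0.5 * z_low + 0.8 * z_high + x)
            low_steps += 1
        # Slow module: reads the low-level result and updates the plan.
        # The new z_high changes what the fast module settles toward next cycle.
        z_high = math.tanh(0.5 * z_high + 0.8 * z_low)
        trace.append((cycle, round(z_low, 4), round(z_high, 4)))
    return z_high, low_steps, trace

answer, depth, trace = hrm_forward(x=0.3)
print(depth)  # n_cycles * t_low sequential low-level updates
for row in trace:
    print(row)
```

The effective sequential depth is `n_cycles * t_low` even though there are only two small modules, which is the sense in which depth is obtained without emitting intermediate chain-of-thought tokens.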

3. Dedicated Reasoning Modules and Modes: Tailoring for Cognitive Processes

Qwen3's thinking mode : Dynamic switching between complex reasoning and rapid response, managed via chat templates and a thinking budget.

GLM's dual-mode toggle : In GLM-4.5/Air, offers distinct thinking and non-thinking modes for complex reasoning, tool use, and instant responses.

GLM-Z1 / GLM-Z1-Air / Z1-Rumination (Chinese) :

Framework: "Agentic Reasoning" integrating external tool-using agents (web search, code execution).

Key Component: "Mind-Map" agent builds a structured knowledge graph for reasoning context and logical relationships.

Z1-Rumination: Focuses on "deeper and longer thinking" for open-ended, complex problems, integrating search tools during deep thought.

DeepSeek-R1 (Chinese) :

Training: Explores reasoning capabilities through Reinforcement Learning (RL) (DeepSeek-R1-Zero).

Pipeline: Multi-stage training (cold-start data, two RL stages, two SFT stages) to refine emergent reasoning and align with human preferences.

Capabilities: Self-verification, reflection, and generation of long Chain-of-Thoughts (CoTs).

S1.1-32B (US) :

Focus: "Budget forcing" to control test-time compute and improve reasoning.

Mechanism: Forcefully terminates thinking by appending an end-of-thinking delimiter, or lengthens it by suppressing that delimiter and appending "Wait", which prompts self-correction.

Distillation: Distilled from DeepSeek-R1 traces, achieves high performance on competition math with minimal training data (1000 examples).
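
The budget-forcing mechanism described for S1.1-32B can be sketched as a small decoding loop. This is a minimal illustration under assumptions: `next_token` is a hypothetical callable standing in for the model, the end-of-thinking delimiter is assumed to be the literal string `</think>`, and budgets are counted in whole tokens.

```python
def budget_forced_thinking(next_token, min_tokens, max_tokens,
                           end_token="</think>", wait_token="Wait"):
    """Generate a thinking trace while enforcing a minimum and maximum budget.

    next_token: hypothetical callable taking the trace so far, returning one token.
    """
    trace = []
    while len(trace) < max_tokens:
        token = next_token(trace)
        if token == end_token:
            if len(trace) >= min_tokens:
                break                   # model stopped and the minimum is met
            trace.append(wait_token)    # suppress the stop, nudge it to continue
            continue
        trace.append(token)
    trace.append(end_token)             # natural stop, or forced cut at the cap
    return trace

def toy_model(trace):
    """Stub model: tries to stop after every third token of 'reasoning'."""
    return "</think>" if len(trace) % 3 == 2 else f"step{len(trace)}"

print(budget_forced_thinking(toy_model, min_tokens=5, max_tokens=8))
print(budget_forced_thinking(toy_model, min_tokens=0, max_tokens=2))
```

In the first call the stub tries to stop too early and is pushed on with "Wait"; in the second it is cut off at the cap before it would have stopped by itself.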

Cogito v2's Iterated Distillation and Amplification (IDA) : Two-step loop of inference-time reasoning and iterative policy improvement, distilling search discoveries back into the model to enhance "intuition" and shorten reasoning chains.

HRM's latent internal reasoning : Performs sequential reasoning in a single forward pass without explicit CoT, leveraging dual recurrent modules and hierarchical convergence.

Post-Training Techniques and Efficiency Paradigms

1. Reinforcement Learning and Preference Optimization: Shaping Behavior for Performance

DeepSeek-R1 (Chinese) :

Pipeline: Two RL stages and two SFT stages.

RL-Emergence: DeepSeek-R1-Zero showed reasoning capabilities emerging purely from RL, without initial SFT.

Refinement: Combines emergent RL capabilities with SFT for human alignment and readability.

Kimi-K2 (Chinese) :

Process: Multi-stage post-training with large-scale agentic data synthesis pipeline and joint RL stage.

Learning: Improves through interactions with real and synthetic environments.

Tülu 3 (AI2) (US) :

Methods: Supervised Finetuning (SFT), Direct Preference Optimization (DPO), and Reinforcement Learning with Verifiable Rewards (RLVR).

Focus: Refines core skills (reasoning, math, coding, safety) using public, synthetic, and decontaminated data.

RLVR: Provides objective, verifiable feedback for specific, measurable skills (e.g., math problems), leading to robust skill acquisition.
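
A verifiable reward, in the sense used above, is simply a function that checks the model's output against a known answer instead of asking a learned reward model. The sketch below assumes a math task with a numeric reference answer; the number-extraction heuristic and the function names are hypothetical and are not Tülu 3's actual verifier.

```python
import re

def extract_final_number(text):
    """Return the last number appearing in the text, or None if there is none."""
    matches = re.findall(r"-?\d+(?:\.\d+)?", text.replace(",", ""))
    return float(matches[-1]) if matches else None

def verifiable_reward(completion, ground_truth, bonus=1.0):
    """Binary reward: `bonus` if the final number equals the reference, else 0."""
    predicted = extract_final_number(completion)
    if predicted is None:
        return 0.0
    return bonus if abs(predicted - ground_truth) < 1e-9 else 0.0

print(verifiable_reward("12 * 4 = 48, so the answer is 48.", 48))
print(verifiable_reward("I think it is 47", 48))
```

Because the check is deterministic, the reward cannot be gamed by fluent but wrong answers, which is the property the text attributes to RLVR.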

OLMo 2 (AI2) (US) :

RLVR Extension: Extends Tülu 3's final-stage RLVR.

Two-Stage Pretraining:

Stage 1 (Longest): Broad web-sourced data (e.g., OLMo 2 Mix 1124, 3.9 trillion tokens).

Stage 2 (Mid-training): Specialized, high-quality (often synthetic) data (Dolmino Mix 1124) to infuse knowledge and patch skill deficiencies (e.g., math).

Impact: Mid-training dramatically improves performance (e.g., OLMo 2 7B improved 10.6 points).

Checkpoint Soups: Final models obtained by averaging multiple checkpoints for better performance.
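
Checkpoint averaging is easy to show in miniature. The sketch below assumes uniform weighting and represents each checkpoint as a plain dictionary of flat float lists; the parameter names are made up, and real checkpoints would hold tensors rather than lists.

```python
def average_checkpoints(checkpoints):
    """Uniformly average parameters across checkpoints with identical keys and shapes.

    Each checkpoint is a dict mapping parameter name -> flat list of floats.
    """
    n = len(checkpoints)
    soup = {}
    for name in checkpoints[0]:
        # Group the i-th value of this parameter from every checkpoint together.
        columns = zip(*(ckpt[name] for ckpt in checkpoints))
        soup[name] = [sum(values) / n for values in columns]
    return soup

ckpts = [
    {"layer.weight": [1.0, 2.0], "layer.bias": [0.0]},
    {"layer.weight": [3.0, 4.0], "layer.bias": [0.5]},
    {"layer.weight": [5.0, 6.0], "layer.bias": [1.0]},
]
print(average_checkpoints(ckpts))
```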

2. Distillation and Efficiency-Focused Training: Maximizing Performance per Compute

DeepSeek-R1's distillation (Chinese) :

Finding: Reasoning patterns from larger models can be distilled into smaller models, outperforming direct RL on smaller models.

Impact: Open-sourcing distilled Qwen and Llama models makes advanced reasoning accessible.

S1.1-32B (US) :

Efficiency: Achieved with minimal training data (1000 high-quality reasoning examples) and "budget forcing."

Leverage: Distills from DeepSeek-R1 traces, showing inference-time interventions can yield significant reasoning gains.

Cogito v2's Iterated Distillation and Amplification (IDA) (US) :

Mechanism: Distills inference-time search discoveries back into model parameters, refining "intuition" and leading to shorter reasoning chains.

Cost-Efficiency: Combined training cost for multiple large models was less than $3.5 million, challenging the "compute-is-all" narrative.
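
The amplify-then-distill loop behind IDA can be illustrated with a toy in which the "policy" is just a lookup table of first guesses. Amplification runs a small search around the current guess, and distillation writes the improved answer back. Everything here is an assumption made for illustration (the scoring function, the search radius, the table standing in for model parameters); it is not Cogito v2's training procedure.

```python
def score(problem, answer):
    """Verifier: higher is better, maximal when answer == problem['target']."""
    return -abs(problem["target"] - answer)

def amplify(policy, problem, search_radius=2):
    """Inference-time search: explore around the policy's first guess, keep the best."""
    guess = policy.get(problem["name"], 0)
    candidates = range(guess - search_radius, guess + search_radius + 1)
    return max(candidates, key=lambda a: score(problem, a))

def distill(policy, problem, improved_answer):
    """Write the search result back so the policy's first guess is better next time."""
    policy[problem["name"]] = improved_answer

problems = [{"name": "p1", "target": 7}, {"name": "p2", "target": -3}]
policy = {}  # the "intuition": a direct guess per problem, defaulting to 0
for _ in range(5):
    for problem in problems:
        distill(policy, problem, amplify(policy, problem))

print(policy)
```

After a few rounds the policy's direct guess is already the best answer, so no search is needed at inference time. That is the toy analogue of shorter reasoning chains.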

Falcon-H1's parameter efficiency (TII, UAE) :

Result: Direct consequence of its hybrid architecture.

Performance: Competitive with models several times its size (e.g., 0.5B rivals 7B, 1.5B-Deep rivals 7-10B).

OLMo 2's transparency and two-stage pretraining (US) :

Transparency: Releases all artifacts openly (models, data, code, logs, checkpoints).

Impact: Fosters collaborative research and reproducibility, accelerating collective progress by demonstrating competitive performance with full transparency.

Agentic Capabilities and Strategic Depth

1. Tool Use and Autonomous Planning: Bridging LLMs with the World

Kimi-K2 (Chinese) :

Design: Strengths in agentic capabilities, excelling in tool use and long-horizon planning.

Training: Multi-stage post-training with large-scale agentic data synthesis and joint RL.

GLM-4.5 / GLM-4.5-Air (Chinese) :

Optimization: Foundation models for agentic tasks with 128K context length and native function calling.

Performance: Robust in agentic coding, full-stack development, and creating sophisticated artifacts (mini-games, simulations, presentations).

Tool Calling: Achieves high average tool calling success rate (90.6%), outperforming Kimi-K2 and Qwen3-Coder.

GLM-Z1 / GLM-Z1-Rumination (Chinese) :

Framework: "Agentic Reasoning" dynamically integrates external tools (web search, code execution).

GLM-Z1-Rumination: Focuses on "deeper and longer thinking" for open-ended problems, integrating search tools.

Mind-Map Agent: Structured memory (knowledge graph) maintains coherence in long reasoning chains.
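
A structured memory of the kind attributed to the Mind-Map agent can be approximated by a small graph of (subject, relation, object) triples that the reasoner queries for chains of relations. The class and method names below are hypothetical, and the real agent's graph construction is not described in this article.

```python
from collections import defaultdict

class MindMap:
    """Toy structured memory: stores (subject, relation, object) triples."""

    def __init__(self):
        self.edges = defaultdict(list)

    def add(self, subject, relation, obj):
        self.edges[subject].append((relation, obj))

    def path(self, start, goal):
        """Breadth-first search for a chain of relations linking start to goal."""
        frontier = [(start, [])]
        seen = {start}
        while frontier:
            node, chain = frontier.pop(0)
            if node == goal:
                return chain
            for relation, nxt in self.edges.get(node, []):
                if nxt not in seen:
                    seen.add(nxt)
                    frontier.append((nxt, chain + [(node, relation, nxt)]))
        return None  # no connection recorded between the two concepts

memory = MindMap()
memory.add("HRM", "has_module", "high-level planner")
memory.add("high-level planner", "guides", "low-level solver")
memory.add("HRM", "evaluated_on", "Sudoku")

print(memory.path("HRM", "low-level solver"))
```

Storing intermediate findings this way lets a long reasoning chain recover how two facts relate without rereading the whole transcript.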

DeepSeek-R1 (Chinese): Strong performance on coding and mathematical reasoning benchmarks, implying a robust foundation for tool-augmented reasoning.

Cogito v2 (US): Emergent property of "reasoning over the visual domain by pure transfer learning" despite text-only training, due to multimodal base model.

2. Self-Verification and Reflection Mechanisms: Building Trustworthy Autonomy

DeepSeek-R1 (Chinese): DeepSeek-R1-Zero (pure RL) demonstrates "self-verification" and "reflection" capabilities.

S1.1-32B (US): "Budget forcing" mechanism compels continued thinking, allowing it to "double-check its answer, often fixing incorrect reasoning steps."

Cogito v2 (US): "Iterative policy improvement" distills search discoveries back into the model, improving "intuition" for the "right trajectory," an internalized form of reflection.

Evaluation through Lambert’s Evolution Framework

1. Skills: Can the model reason, code, and follow instructions?

Models show strong foundational abilities:

GLM-4.5 / GLM-4.5-Air: Unify reasoning, coding, agentic capabilities; comprehensive full-stack development.

DeepSeek-R1: Strong in math (AIME 2024, MATH-500) and expert-level coding (Codeforces Elo).

S1.1-32B: Excels in competition math (MATH, AIME24).

Qwen3-235B-A22B / Qwen3-Thinking-2507: State-of-the-art in code generation, math, agent tasks; expanded multilingual capabilities.

Kimi-K2: Robust in coding (LiveCodeBench, OJBench), math (AIME 2025), general reasoning (GPQA-Diamond), and agentic strengths.

Falcon-H1: Leadership across reasoning, math, multilingual understanding, instruction-following, and scientific knowledge.

2. Calibration: Is reasoning interpretable and robust across tasks?

Focus on transparency, controllability, and robustness:

Cogito v2: Reduces misalignment risk via Iterated Distillation and Amplification (IDA), internalizing aligned behaviors and achieving shorter, more interpretable reasoning chains.

Qwen3-Thinking: Provides control over reasoning via explicit "thinking budget," enhancing predictable and robust behavior.

Tülu 3: Employs Reinforcement Learning with Verifiable Rewards (RLVR) for objective, verifiable feedback, leading to robust and accurate skill development in domains like mathematics.

3. Strategy: Can the model plan, reflect, and simulate tools?

Models demonstrate higher-level cognitive functions:

Kimi-K2: Excels in long-horizon planning and tool use; post-training embeds reasoning before acting.

GLM-Z1-Rumination: Engineered for "deeper and longer thinking" on "open-ended and complex problems," integrating search tools and using a Mind-Map agent for structured memory.

Cogito v2: "Iterative policy improvement" distills internal "search" discoveries, enabling learning to plan and reflect on reasoning trajectories.

DeepSeek-R1: Demonstrates "self-verification, reflection, and generating long CoTs," indicating internal strategic self-assessment.

4. Abstraction: Can it generalize across domains and self-direct?

The highest stage of the progression:

HRM (Hierarchical Reasoning Model): Achieves exceptional performance on complex reasoning (Sudoku-Extreme, Maze-Hard, ARC-AGI) with minimal data (~1000 samples) and parameters (27M), without pre-training or CoT. Its bio-inspired architecture suggests fundamental generalization.

GLM-Z1-Air / Z1-Rumination: Z1-Rumination focuses on "deeper and longer thinking" for "open-ended and complex problems". GLM-Z1-Air is a "lightweight agentic reasoning" model, implying efficient generalization of agentic behaviors.

AlphaEvolve (DeepMind) (Closed-Source Insight) :

Evolutionary Approach: Autonomous pipeline of LLMs (Gemini Flash for breadth, Gemini Pro for depth) iteratively improves algorithms by modifying code and receiving feedback.

Algorithmic Discovery: Discovered novel, provably correct algorithms, including a 4x4 complex matrix multiplication procedure with 48 scalar multiplications (improving Strassen's 1969 algorithm after 56 years).

Symbolic Compression: Ability to identify and optimize underlying symbolic representations.

Modular Planning: Leverages a "modular agent architecture" for extendable/replaceable components.

Architectural Pruning: Hints at identifying and optimizing components for efficiency, even optimizing low-level GPU instructions.

Self-Improvement: Can optimize its own training processes and contribute to hardware optimization, suggesting recursive intelligence gains.
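
The propose, evaluate, and select loop described for AlphaEvolve can be shown with a toy evolutionary search. Here the "program" is just a pair of coefficients and the proposal step is a random nudge standing in for an LLM-written code change. The target function, the mutation sizes, and all names are assumptions for illustration, not DeepMind's system.

```python
import random

def evaluate(candidate):
    """Automated evaluator: negative squared error of a*x + b against 3*x + 1."""
    a, b = candidate
    return -sum((a * x + b - (3 * x + 1)) ** 2 for x in range(5))

def propose_mutation(candidate, rng):
    """Stand-in for the LLM proposal step: randomly nudge one coefficient."""
    child = list(candidate)
    i = rng.randrange(len(child))
    child[i] += rng.choice([-1.0, -0.5, 0.5, 1.0])
    return tuple(child)

def evolve(generations=200, population_size=8, seed=0):
    rng = random.Random(seed)
    population = [(0.0, 0.0)]
    history = []
    for _ in range(generations):
        parent = rng.choice(population)
        population.append(propose_mutation(parent, rng))
        # Keep only the best-scoring candidates, so the best score never regresses.
        population = sorted(set(population), key=evaluate, reverse=True)[:population_size]
        history.append(evaluate(population[0]))
    return population[0], history

best, history = evolve()
print(best, history[-1])
```

The important design choice is that selection relies on an automated evaluator rather than on the proposer's own judgment, which is what allows the loop to run without a human in it.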

Thoughts

The evolution of AI reasoning models is a dynamic interplay of innovation and strategic development. It is, and will continue to be, an exciting space to watch. The lines blur when classifying models strictly into “reasoning” or “agentic” buckets, because “the system” works best when both are orchestrated together. It’s not easy to manage, and there are open issues, such as adversarial attacks that need better guardrails, and alignment with human values. The pace, however, will not be slowing down anytime soon. For many of those involved, the stakes are simply too high. Or you could also say: “Because ……To the Moon with Valuations”. No pun intended.

Convergent Strategies:

Chinese Models (Qwen3, GLM-4.5): Unified dual-mode architectures for versatility and cost-efficiency.

US Models (Cogito v2): Iterated Distillation and Amplification (IDA) for "intuitive," efficient reasoning.

External vs. Internal Reasoning:

Chinese (GLM-Z1): Emphasize externalized reasoning aids like knowledge graphs for robustness.

US (Cogito v2): Focus on internalized, compiled reasoning for speed and conciseness.

Efficiency & Transparency:

Techniques: Budget forcing (S1.1-32B), verifiable rewards (Tülu 3), novel architectures (Falcon-H1, HRM) for performance per compute.

Openness (OLMo 2): Full transparency accelerates community research.

Lambert's Framework Progression:

Skills: Strong foundational abilities (coding, math, instruction following).

Calibration: Improving interpretability, controllability, and robustness.

Strategy: Advanced planning, tool use, and self-reflection.

Abstraction: Emerging capabilities in generalization and self-direction (HRM, AlphaEvolve), pointing to future autonomous discovery.

The field is moving towards AI systems that are not only more intelligent but also more efficient, interpretable, and capable of autonomous interaction and self-improvement. It is a creative space that merges many disciplines. I am optimistic about its various futures.

References Used:

Qwen3 Technical Report, https://arxiv.org/pdf/2505.09388

Qwen/Qwen3-235B-A22B-Thinking-2507 - Hugging Face, https://huggingface.co/Qwen/Qwen3-235B-A22B-Thinking-2507

[2505.09388] Qwen3 Technical Report - arXiv, https://arxiv.org/abs/2505.09388

[2507.20534] Kimi K2: Open Agentic Intelligence - arXiv, https://arxiv.org/abs/2507.20534

(PDF) Kimi K2: Open Agentic Intelligence - ResearchGate, https://www.researchgate.net/publication/394081339_Kimi_K2_Open_Agentic_Intelligence

Qwen3-235B-A22B-2507 : r/LocalLLaMA - Reddit, https://www.reddit.com/r/LocalLLaMA/comments/1m5ox8z/qwen3235ba22b2507/

GLM4.5 released! : r/LocalLLaMA - Reddit, https://www.reddit.com/r/LocalLLaMA/comments/1mbg1ck/glm45_released/

GLM-4.5: Reasoning, Coding, and Agentic Abililties - Z.ai, https://z.ai/blog/glm-4.5

DeepSeek-V3 Technical Report - arXiv, https://arxiv.org/html/2412.19437v1

Paper Review of S1: Simple test-time scaling | by Jonathan DeGange | Medium, https://medium.com/@jdegange85/paper-review-of-s1-simple-test-time-scaling-6094eff9c1e8

Falcon-H1: A Family of Hybrid-Head Language Models Redefining Efficiency and Performance - arXiv, https://arxiv.org/html/2507.22448v1

Falcon LLM Team Releases Falcon-H1 Technical Report: A Hybrid Attention–SSM Model That Rivals 70B LLMs - MarkTechPost, https://www.marktechpost.com/2025/08/01/falcon-llm-team-releases-falcon-h1-technical-report-a-hybrid-attention-ssm-model-that-rivals-70b-llms/

[2507.22448] Falcon-H1: A Family of Hybrid-Head Language Models Redefining Efficiency and Performance - arXiv, http://www.arxiv.org/abs/2507.22448

arXiv:2504.14985v2 [cs.CR] 23 Apr 2025, https://arxiv.org/pdf/2504.14985

[2506.13131] AlphaEvolve: A coding agent for scientific and algorithmic discovery - arXiv, https://arxiv.org/abs/2506.13131

AlphaEvolve: A Gemini-powered coding agent for designing advanced algorithms, https://deepmind.google/discover/blog/alphaevolve-a-gemini-powered-coding-agent-for-designing-advanced-algorithms/

OpenAlpha_Evolve is an open-source Python framework inspired by the groundbreaking research on autonomous coding agents like DeepMind's AlphaEvolve. - GitHub, https://github.com/shyamsaktawat/OpenAlpha_Evolve

Hierarchical Reasoning Model - arXiv, https://arxiv.org/html/2506.21734v1

Hierarchical Reasoning Model - Hacker News, https://news.ycombinator.com/item?id=44699452

Kimi K2: Open Agentic Intelligence - YouTube,

Tülu 3: Pushing Frontiers in Open Language Model Post-Training - arXiv, https://arxiv.org/html/2411.15124v3

[2501.00656] 2 OLMo 2 Furious - arXiv, https://arxiv.org/abs/2501.00656

zai-org/GLM-Z1-Rumination-32B-0414 - Hugging Face, https://huggingface.co/zai-org/GLM-Z1-Rumination-32B-0414

DeepSeek-R1: Incentivizing Reasoning Capability in LLMs via Reinforcement Learning, https://arxiv.org/html/2501.12948v1

Tülu 3: Pushing Frontiers in Open Language Model Post-Training - arXiv, https://arxiv.org/pdf/2411.15124

Iterated Distillation and Amplification | by Ajeya Cotra | AI Alignment, https://ai-alignment.com/iterated-distillation-and-amplification-157debfd1616