The Grandmaster's Gambit: How AI Learns to Think Like a Chess Champion

A Study in Strategic Decision-Making and the Art of 'Thinking Fast and Slow'

A few hours ago, Norway Chess 2025 crowned its winners. A few of the rounds, especially Gukesh's game against Fabiano, were (I must admit) extremely painful to watch. Seriously! Painful! And I quote:

GM Magnus Carlsen has won his seventh Norway Chess title after escaping then almost winning a lost position against GM Arjun Erigaisi. With seconds to spare, he took a draw, which seemed to guarantee the title as GM Fabiano Caruana was beating GM Gukesh Dommaraju, but then Caruana let the win slip... only for the world champion to make the last mistake. Gukesh was inconsolable as he realized his title chances were gone, though he still took third place ahead of GM Hikaru Nakamura, who lost to GM Wei Yi in armageddon.

~ Source: Chess.com

So let me rewind the clock about four days, to Round 6, when Gukesh still had hope of a title win. Don’t get me wrong: Magnus (for all his bravado and brilliance) still reigns supreme, 100%, in my book. He is the very best of grandmasters. But, you know, I can’t help rooting for the person I deemed the “underdog” (yes, Grandmaster Gukesh excels at classical chess, but you know...). The “underdog”.

To the very many who watched (and then recalculated and rewatched the moves), it was a historic moment: in Round 6 of Norway Chess 2025, the young Indian prodigy and reigning World Champion D. Gukesh defeated world No. 1 Magnus Carlsen in a classical game. This wasn't just a win; it was a testament to Gukesh's tenacity, tactical brilliance, and uncanny ability to navigate the most complex, uncertain moments of a game. Okay, fine: Magnus did play that questionable 44...f6?! Gukesh spent most of the game finding move after move just to survive, until what I think was that “mistake” by Magnus. Perhaps the issue is the length of time classical chess demands? Magnus (and Hikaru) have said they may consider not playing the “long games” in favor of faster chess. We’ll see.

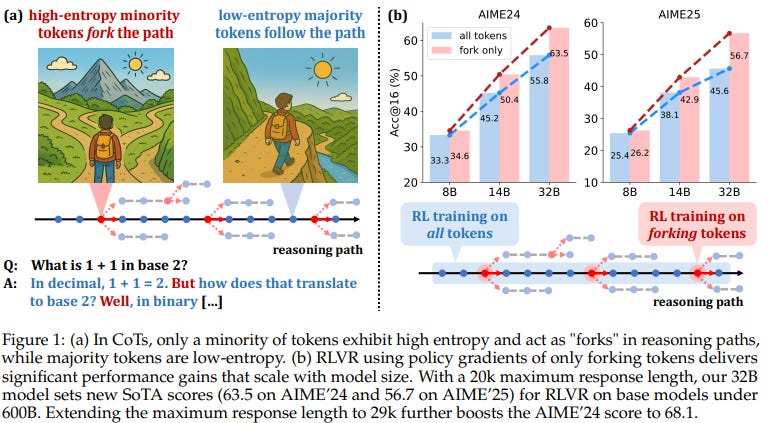

But on that very same day, while waiting for Round 7 (2 June 2025), I happened to be reading a Qwen paper posted on arXiv: “Beyond the 80/20 Rule: High-Entropy Minority Tokens Drive Effective Reinforcement Learning for LLM Reasoning”.

In what I see as a striking parallel, cutting-edge AI research is revealing something profound: the most effective way to train large language models (LLMs), the AI systems that understand and generate human language, to reason is not to teach them every obvious step but to sharpen their ability to make critical decisions at these very same "uncertain" junctures. This quest for refined decision-making in AI finds a powerful conceptual mirror in human chess, particularly in the stark difference between "thinking fast" and "thinking slow" that sets the greatest grandmasters apart.

The LLM's Inner Game: High-Entropy Thinking

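Imagine an LLM solving a complex problem step by step, a process known as Chain-of-Thought (CoT) reasoning. Each word or sub-word unit it generates is called a "token." At every step, the model assigns a probability to each token in its vocabulary, and the "entropy" of that distribution measures how uncertain the choice of the next token is: low entropy means one option dominates, high entropy means several are competitive. This is surprisingly akin to the predictability of a chess move.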
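For the mathematically inclined, a standard way to write this per-token entropy (the paper's exact notation may differ slightly) uses the model's logits z_t at step t, a sampling temperature T, and a vocabulary of V tokens:

```latex
p_t = \mathrm{softmax}\!\left(\frac{z_t}{T}\right), \qquad
H_t = -\sum_{j=1}^{V} p_{t,j}\,\log p_{t,j}
```

H_t is 0 when all the probability sits on a single token and reaches its maximum, log V, when every token is equally likely.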

Low-Entropy Tokens ➡️: These are highly predictable tokens, where the LLM is very confident about what comes next. Think of them as the routine, almost automatic moves in a chess game, like developing a knight to a standard square in the opening, or a forced capture. Examples include common word endings ("-ing," "-ly"), standard code syntax (if, else), or predictable parts of a mathematical equation (e.g., "1 + 1 = 2"). These tokens primarily complete linguistic structures or follow established, unambiguous reasoning paths, requiring minimal "thought" from the LLM.

High-Entropy Tokens 🎯: These are the unpredictable tokens, where the LLM faces genuine uncertainty and has multiple plausible options. These are the "forking tokens": critical decision points that can steer the reasoning down entirely different paths. Examples include words like "however," "unless," "because," "suppose," or "define." Just as a chess player might ponder whether to attack, defend, or exchange pieces, an LLM at a high-entropy token is weighing strategic alternatives, where different choices can lead to vastly different outcomes in its reasoning process.
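To make the distinction concrete, here is a minimal Python sketch that turns raw next-token logits into probabilities and measures their entropy. The four-token vocabulary and the logit values are invented for illustration; a real model produces logits over tens of thousands of tokens.

```python
import math

def softmax(logits, temperature=1.0):
    """Convert raw logits into a probability distribution (numerically stable)."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def token_entropy(logits, temperature=1.0):
    """Shannon entropy (in nats) of the next-token distribution."""
    probs = softmax(logits, temperature)
    return -sum(p * math.log(p) for p in probs if p > 0)

# Hypothetical logits over a tiny 4-token vocabulary.
# A "follower" step: the model is nearly certain (e.g. the "else" closing an "if").
follower_logits = [12.0, 0.0, 0.0, 0.0]
# A "fork" step: several continuations ("however", "suppose", ...) are plausible.
fork_logits = [2.0, 1.9, 1.8, 1.7]

print(f"follower entropy: {token_entropy(follower_logits):.3f} nats")
print(f"fork entropy:     {token_entropy(fork_logits):.3f} nats (max is ln 4 = {math.log(4):.3f})")
```

The "follower" step comes out near zero, while the "fork" step lands close to the maximum possible for four options.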

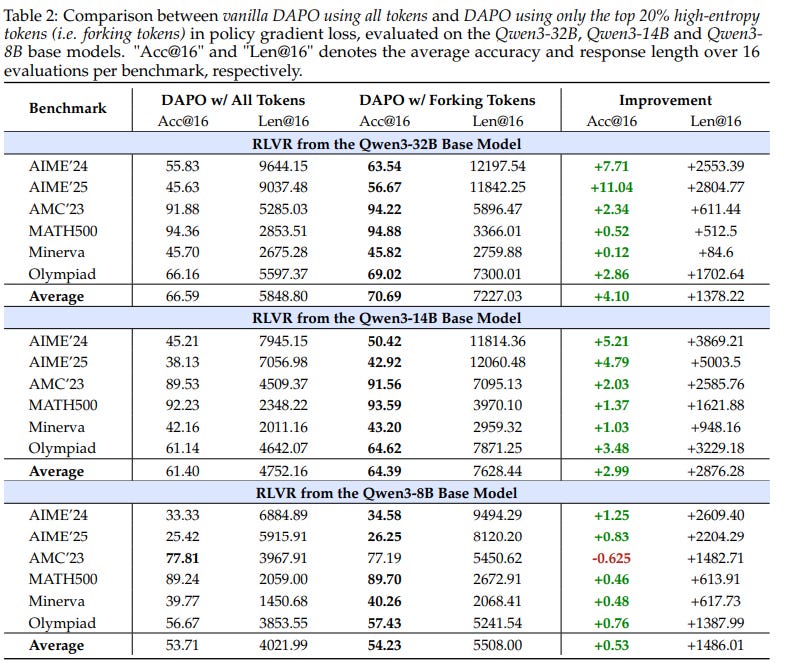

The groundbreaking insight from the paper "Beyond the 80/20 Rule: High-Entropy Minority Tokens Drive Effective Reinforcement Learning for LLM Reasoning" is that in an LLM's CoT, only a small fraction of tokens (a "minority") are these high-entropy "forks," while the vast majority are low-entropy "followers." This matters because it suggests that training effort should be disproportionately focused on these critical few tokens, much like a chess coach focusing on a student's decision-making in complex middlegame positions rather than drilling basic opening moves.
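Picking out that minority is mechanically simple. The sketch below flags the top 20% of positions by entropy (the cutoff used in the paper's main experiments) in a single made-up chain of thought. As I understand it, the paper computes the threshold over all tokens in a training batch rather than per response; the tokens and entropy values here are hypothetical.

```python
def forking_token_mask(entropies, top_fraction=0.2):
    """Return a boolean mask marking the top `top_fraction` of tokens by entropy.

    The cutoff is the entropy of the k-th highest token, with
    k = round(len(entropies) * top_fraction), i.e. roughly the 80th percentile
    when top_fraction=0.2. Ties at the cutoff are all kept.
    """
    if not entropies:
        return []
    k = max(1, round(len(entropies) * top_fraction))
    threshold = sorted(entropies, reverse=True)[k - 1]
    return [h >= threshold for h in entropies]

# Hypothetical tokens from a short chain of thought, with made-up entropies (nats).
tokens    = ["The", "sum", "is", "even", ",", "however", "suppose", "n", "is", "odd"]
entropies = [0.05, 0.30, 0.02, 0.40, 0.01, 1.90, 1.40, 0.20, 0.03, 0.60]

mask = forking_token_mask(entropies, top_fraction=0.2)
forks = [tok for tok, is_fork in zip(tokens, mask) if is_fork]
print("forking tokens:", forks)
print(f"fraction kept: {sum(mask) / len(mask):.0%}")
```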

The research builds on DAPO (Decoupled Clip and Dynamic sAmpling Policy Optimization), an existing reinforcement learning with verifiable rewards (RLVR) algorithm, and refines it; I refer to the result here as Refined DAPO. Instead of updating the model on every token, this approach restricts its "policy gradient updates" (the adjustments the model makes to its internal "strategy" or "behavior") to the high-entropy minority tokens. The gradients for the low-entropy tokens are effectively "masked," or ignored, during training. This masking matters because it keeps the model from over-optimizing trivial, predictable steps, letting it dedicate its learning capacity to the most impactful decision points.
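Here is a rough, framework-free sketch of what "masking" means in the objective. It uses a PPO-style clipped surrogate with separate lower and upper clip ranges (DAPO's "clip-higher" idea) and simply drops every token outside the high-entropy set, so those tokens contribute nothing to the loss and would therefore receive no gradient. The clip values (0.2 and 0.28), the normalization by the total token count, and all the numbers are assumptions for illustration; a real implementation would work on tensors in an autograd framework.

```python
import math

def masked_clipped_pg_loss(logp_new, logp_old, advantages, fork_mask,
                           eps_low=0.2, eps_high=0.28):
    """Clipped policy-gradient loss restricted to high-entropy ("forking") tokens.

    For each kept token t:
        ratio_t = exp(logp_new_t - logp_old_t)
        term_t  = min(ratio_t * A_t, clip(ratio_t, 1 - eps_low, 1 + eps_high) * A_t)
    Tokens with fork_mask[t] == False are skipped entirely, so they add nothing to
    the loss and would receive zero gradient under autograd.
    """
    total = 0.0
    for lp_new, lp_old, adv, keep in zip(logp_new, logp_old, advantages, fork_mask):
        if not keep:
            continue  # low-entropy "follower" token: masked out
        ratio = math.exp(lp_new - lp_old)
        clipped_ratio = min(max(ratio, 1.0 - eps_low), 1.0 + eps_high)
        total += min(ratio * adv, clipped_ratio * adv)
    # Assumption: average over all tokens in the response (token-level normalization).
    # Negated because optimizers minimize.
    return -total / len(logp_new)

# Hypothetical per-token log-probabilities for one 4-token response.
logp_old   = [-0.10, -1.20, -0.05, -0.90]  # under the policy that generated it
logp_new   = [-0.10, -1.00, -0.05, -0.80]  # under the policy being updated
advantages = [1.0, 1.0, 1.0, 1.0]          # the response's advantage, shared by its tokens
fork_mask  = [False, True, False, True]    # only tokens 1 and 3 are high-entropy

print(f"loss: {masked_clipped_pg_loss(logp_new, logp_old, advantages, fork_mask):.4f}")
```

Changing the log-probability of a masked "follower" token leaves the loss untouched, which is exactly the sense in which its gradient is zero.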

The LLM's Learning Flow looks something like this:

Base LLM 🤖

➡️ CoT Generation 💭

➡️ Token Entropy Calculation 📊

➡️ Identification of High-Entropy Minority Tokens, the “Forks” (e.g., the top 20%) 🎯

➡️ Refined DAPO (RLVR with focused gradient updates on high-entropy tokens) 🚀

➡️ Final Enhanced Reasoning Model ✨

Why Focus on the 'Forks'? The Efficiency and Precision of Targeted Learning

This targeted approach to LLM training offers significant advantages. By concentrating gradient updates on high-entropy tokens, Refined DAPO directs the model's learning toward the most impactful parts of its reasoning process. It's akin to a student spending study time on the hardest exam questions rather than re-reading material they have already mastered. This precision pays off: LLMs trained this way perform comparably to models trained on all tokens and, especially for larger LLMs, significantly better.

Furthermore, this method promotes genuine understanding and generalization over rote memorization. Traditional Supervised Fine-Tuning (SFT) can sometimes lead LLMs to "memorize" reasoning paths, effectively reducing the entropy of critical "forking" tokens and limiting their ability to adapt to novel problems. By contrast, focusing on high-entropy tokens forces the LLM to learn how to make robust decisions when faced with uncertainty, leading to more flexible and powerful reasoning capabilities. This enhanced model doesn't necessarily generate more high-entropy tokens, but it makes smarter choices at those critical junctures, leading to more robust and often more detailed reasoning, even for complex, unseen problems [1].

Chessboard Decisions: Where Grandmasters "Fork" and "Think Fast and Slow"

This concept of entropy, I believe, applies beautifully to chess. It underpins how human grandmasters navigate the game, which is also often viewed through the lens of Daniel Kahneman's "Thinking, Fast and Slow."

Low-Entropy Moves ➡️: These are forced moves, highly predictable sequences, or standard opening lines where the "best" move is obvious and there are few viable alternatives. Many early game moves fall into this category, as do tactical sequences where a checkmate or material gain is unavoidable. Such moves are often handled by System 1 thinking—fast, intuitive, and automatic, requiring little conscious effort.

High-Entropy Moves 🎯: These are positions where multiple viable moves exist, and the "best" move isn't immediately clear. The evaluation difference between the top moves might be very small, making the choice complex and uncertain. These are the "forking points" in a chess game, where a grandmaster must dig deep into calculation, relying on intuition, pattern recognition, and vast experience to choose the best path. Navigating these moments primarily engages System 2 thinking: slow, deliberate, and effortful, demanding conscious attention and deep computation.
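The analogy can even be made numerical. The toy function below converts engine evaluations of candidate moves into a probability distribution with a softmax and measures its entropy, just as with LLM tokens. This is not a standard chess metric: the softmax mapping, the 50-centipawn temperature, and the evaluations are all hypothetical.

```python
import math

def move_entropy(evals_cp, temperature_cp=50.0):
    """Entropy (nats) of a softmax over candidate-move evaluations in centipawns.

    Illustrative only: mapping engine scores to probabilities this way, and the
    50-centipawn temperature, are assumptions made for the analogy.
    """
    scaled = [e / temperature_cp for e in evals_cp]
    m = max(scaled)
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    probs = [x / total for x in exps]
    return -sum(p * math.log(p) for p in probs if p > 0)

# Hypothetical engine evaluations (centipawns, from the mover's side).
forced_recapture = [+30, -450, -600, -700]  # one move keeps material; the rest lose a piece
quiet_middlegame = [+25, +20, +15, +10]     # four moves within a quarter of a pawn

print(f"forced recapture: {move_entropy(forced_recapture):.2f} nats")
print(f"quiet middlegame: {move_entropy(quiet_middlegame):.2f} nats")
```

The forced recapture is a low-entropy "follower" move, while the quiet middlegame position, with four near-equal options, is a high-entropy "fork."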

Grandmasters don't just memorize millions of positions; they master the application of their chess understanding. They excel at navigating these high-entropy situations by drawing upon both systems:

Deep Calculation (System 2): Analyzing many moves ahead, exploring various branches of the "game tree", a painstaking process requiring focused attention.

Pattern Recognition (System 1, built by System 2 practice): Instantly recognizing familiar patterns from their vast experience, which gives them rapid access to potential moves and strategies. This intuitive leap is the result of countless hours of deliberate System 2 training.

Strategic Intuition (System 1): Making "intuitive" discoveries of good moves, often based on unreflective, automatic processes built from years of practice.

Generalization (Synergy of Systems): Adapting previous knowledge to the current, unique context, blending their intuitive grasp with precise calculation.
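The "deep calculation" half of that picture is what chess programs formalize as game-tree search. Below is a bare-bones minimax over a hand-made two-ply tree, with leaf scores given from the first player's point of view. It is only a sketch: real engines add pruning such as alpha-beta, move ordering, and far deeper searches, and nothing here models how a human actually calculates.

```python
def minimax(node, maximizing=True):
    """Return (value, leaves_visited) for a toy game tree.

    A node is either a number (a leaf evaluation from the first player's
    point of view) or a list of child nodes (the legal replies).
    """
    if isinstance(node, (int, float)):
        return node, 1
    best = float("-inf") if maximizing else float("inf")
    visited = 0
    for child in node:
        value, n = minimax(child, not maximizing)
        visited += n
        best = max(best, value) if maximizing else min(best, value)
    return best, visited

# Hypothetical 2-ply position: three candidate moves, each with two replies.
tree = [
    [3, -2],  # candidate A: looks active, but one reply refutes it
    [1, 1],   # candidate B: quiet and safe
    [5, -4],  # candidate C: tempting, but the best reply punishes it
]
value, leaves = minimax(tree)
print(f"best guaranteed score: {value}, leaves examined: {leaves}")
```

The flashy candidates (A and C) contain the largest scores, but the search prefers the quiet move B because it guarantees the best outcome against the opponent's best reply.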

The Chess Arena: A Test of Pace and Precision

The diversity in chess time controls directly tests these different cognitive systems. The Norway Chess tournament, with its unique format, is a particularly compelling stage for observing how the world's best adapt their "thinking systems" under varying time pressures.

1. Classical Chess: The Crucible of Deep Thought (System 2 Dominance)

This is the traditional form of chess, where depth of calculation and strategic planning take precedence over speed.

Time Control: In Norway Chess 2025, each player is allotted 120 minutes (2 hours) for the entire game, with an additional 10 seconds per move starting from move 41.

Characteristics: With ample time, players predominantly engage their System 2 thinking—slow, deliberate, and effortful analysis. This leads to fewer blunders, meticulous strategic planning, and often higher-quality, complex games that test a player's endurance and systematic problem-solving abilities.

2. Armageddon Chess: The Ultimate Test of Intuition Under Pressure (System 1 in Overdrive)

This sudden-death tie-break is played immediately after a classical game ends in a draw, guaranteeing a decisive outcome for match points.

Time Control: In stark contrast to classical, White receives 10 minutes, while Black gets a mere 7 minutes. Both players receive a 1-second increment starting from move 41.

Draw Odds: Black has "draw odds," meaning if the game ends in a draw by any means (e.g., stalemate, repetition), Black is declared the winner. This rule injects immense tension, forcing White to play for a win while Black aims to survive.

Characteristics: Armageddon chess is a thrilling display of System 1 thinking—fast, intuitive, and often automatic responses. Players must manage their time ruthlessly, relying on quick pattern recognition, tactical reflexes, and sheer nerves to perform under extreme pressure. It's a high-stakes sprint where one slip can be fatal.
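A quick back-of-the-envelope calculation shows how different these budgets are. It assumes, purely as a simplification, that a player spreads the base time evenly over the first 40 moves; the increment only starts at move 41, so it does not help here. In practice, players bank time on routine moves and spend it at the critical, high-entropy moments.

```python
def seconds_per_move(base_minutes, moves=40):
    """Average thinking time per move if the base time is spread evenly over the
    first `moves` moves (the increment starts at move 41, so it is ignored here)."""
    return base_minutes * 60 / moves

# Base times from the Norway Chess 2025 formats described above.
formats = {
    "Classical (each player)": 120,
    "Armageddon, White": 10,
    "Armageddon, Black": 7,
}
for name, minutes in formats.items():
    print(f"{name:<24} {seconds_per_move(minutes):6.1f} s per move")
```

That is 180 seconds per move in classical versus roughly 10 to 15 seconds in Armageddon, a difference of more than tenfold.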

The Chess Grandmasters: A Spectrum of Cognitive Styles

The elite of chess, while all sharing a profound understanding of the game, exhibit distinct strengths and styles when operating across these different time controls.

Magnus Carlsen: The Universal Chess God (with a penchant for System 1 dominance)

Best At: Practically everything, but his absolute mastery lies in Rapid and Blitz. A former long-reigning classical World Champion, he is arguably unparalleled in the faster formats, with multiple World Rapid and World Blitz titles to his name.

Why: Carlsen's strength stems from his "universal" playing style and his uncanny ability to convert minute advantages into wins. He is a master of positional subtleties and endgames, relentlessly pressuring opponents. In faster time controls, his intuition and pattern recognition (System 1) are incredibly sharp, allowing him to find excellent moves at lightning speed and exploit opponents' time trouble. He rarely makes clear blunders and thrives in chaotic, complex scenarios. He has even publicly expressed a desire to shift focus more towards faster chess.

"Thinking Fast and Slow": Carlsen's System 1 is arguably the most refined among all players, enabling him to consistently make excellent decisions almost instinctively, even in complex positions.

D. Gukesh: The Rising Star with Classical Depth (System 2 focused)

Best At: Primarily Classical Chess. His results, including winning the 2024 Candidates Tournament and then the 2024 World Championship match against Ding Liren, plus his classical win over Magnus Carlsen at Norway Chess 2025, underscore his prowess in the longest format.

Why: Gukesh is known for his sharp and precise calculation. He approaches each position as a meticulous puzzle, demonstrating a strong reliance on deep analysis and logical deduction (System 2). As Magnus Carlsen himself noted, Gukesh is "meticulous, he calculates, he sees every position as a problem he has to solve, more than what does my intuition tell me?". His ability to find critical "only moves" in complex and dangerous positions speaks volumes about his profound calculation abilities.

"Thinking Fast and Slow": Gukesh's strength lies in his highly developed System 2. He thrives when he has ample time to think, calculate, and construct precise plans. While he is rapidly improving in faster formats, his current niche is where deep, methodical thought is paramount.

Hikaru Nakamura: The King of Online Chess (System 1 Master)

Best At: Unquestionably, Blitz and Bullet Chess. Nakamura has dominated online speed chess for years, consistently winning major online tournaments, and he is among the world's very best over-the-board blitz players.

Why: Nakamura's playing style is aggressive, dynamic, and highly tactical [3]. He often employs unorthodox openings, thrives on creating imbalances, and possesses an incredible speed of thought. His intuitive grasp of complex positions makes him nearly invincible in faster time controls. He can calculate tactical lines at an astonishing pace, and his ability to win games by "flagging" opponents (winning on time) even from worse positions is legendary. While a formidable classical player and Candidates participant, his true realm of unparalleled dominance is where speed reigns supreme.

"Thinking Fast and Slow": Nakamura is the epitome of a System 1 player. His brain seems wired for rapid-fire decision-making, instantly recognizing patterns and generating responses. He's honed his intuition to such a degree that it often appears as lightning-fast calculation.

Fabiano Caruana: The Classical Tactician (System 2 Virtuoso)

Best At: Classical Chess. Caruana is renowned for his meticulous preparation, deep positional understanding, and exceptional tactical precision in long games. He is a former World Championship challenger in classical chess.

Why: Caruana's strength lies in his thorough opening preparation, logical approach, and ability to find subtle advantages in complex positions. He excels in squeezing wins from seemingly equal endgames and is known for his concrete, highly accurate play. He is less prone to tactical oversights in classical games due to his exhaustive calculation (System 2). While he boasts strong rapid and blitz ratings, he can sometimes show vulnerabilities under severe time pressure, as evidenced by instances in recent Norway Chess tournaments where time trouble led to critical errors.

"Thinking Fast and Slow": Caruana is a master of System 2 thinking. His methodical approach, deep understanding of principles, and precise calculation make him extremely difficult to beat in classical chess. He prioritizes accuracy and logic, which sometimes comes at the cost of speed in faster time controls.

Gukesh vs. Carlsen: A High-Entropy Chess Lesson in "Thinking Fast and Slow"

The specific encounter between D. Gukesh and Magnus Carlsen at Norway Chess 2025 provides a vivid illustration of how these high-entropy decision points and the interplay of "thinking fast and slow" play out on the board. Carlsen, known for his near-flawless play and exceptional System 1 intuition, had largely outplayed Gukesh for much of their game. Experts believed it was only a matter of time before Carlsen would convert his advantage into a win.

However, as the game entered a complex endgame under time pressure, Carlsen made a critical blunder: a knight miscalculation. This was a high-entropy moment, a "fork" where the optimal path was not straightforward, and the pressure amplified the uncertainty. Carlsen's failure to navigate it correctly, likely due to a rare lapse in his formidable System 1 under duress or insufficient System 2 validation, led to his downfall. Yes, yes, everyone can watch, judge, and comment for themselves. But hey, it's fun to rethink!

Gukesh, on the other hand, displayed incredible resilience and "superior calculation." Even when objectively worse, he "continued to find only moves to keep the game going." Such "only moves" typically arise in high-entropy positions where many alternatives seem plausible but only one or a few keep the game alive. His ability to find these precise moves amid the complexity, a sign of strong System 2 calculation even under pressure, allowed him to capitalize on Carlsen's error and turn the game around, securing a historic victory. His Round 6 win showed how well he performs at these critical decision points, even when the odds seemed stacked against him. Pity about today. I actually feel his pain.

The Grandmaster's Mind and the Enhanced LLM: Weaving the Learnings

Anyway, the parallels between the LLM research and grandmaster chess are striking and deeply interconnected through the idea of mastering uncertainty and adapting thinking speed.

Mastering the "Forks": Both the enhanced LLM and the grandmaster achieve superior performance by mastering the "forking points"—the high-entropy moments where critical decisions must be made. For LLMs, this means refining their choices at tokens like "however" or "suppose." For grandmasters, it means finding the optimal move when the board presents numerous viable, yet uncertain, options, regardless of the time control.

Strategic Exploration: The 80/20 paper highlights that focusing on high-entropy tokens enhances "exploration" in LLMs, allowing them to discover better reasoning paths. Similarly, grandmasters "explore" different lines of play through deep calculation (System 2), considering various possibilities before committing to a move. In faster games, this exploration becomes more intuitive (System 1).

Beyond Memorization & Adapting to Speed: Just as grandmasters don't simply memorize every possible chess position but rather generalize from patterns and experience, the enhanced LLMs move beyond rote memorization. By focusing on high-entropy tokens, the LLMs learn to generalize better, applying their refined decision-making skills to new, unseen problems. This contrasts with traditional Supervised Fine-Tuning (SFT), which can lead to memorization by reducing the entropy of these critical "forking" tokens. This echoes how classical players (System 2) transition to faster games by leveraging their deeply ingrained understanding and relying more on their well-trained intuition (System 1), rather than trying to calculate every move.

In essence, this groundbreaking AI research suggests that the path to truly intelligent LLMs lies not in meticulously refining every single step, but in empowering them to become strategic thinkers, capable of making the most impactful decisions when the "board" is most complex and the "game" hangs in the balance. Just like a chess grandmaster, these enhanced LLMs are learning to play the game of reasoning with a deeper, more strategic understanding, mirroring the human ability to effectively switch between thinking fast and thinking slow as the situation demands.

Limitations and Future Horizons

While the "High-Entropy Minority Tokens" research presents a powerful paradigm shift in LLM training, it's important to acknowledge potential limitations and exciting avenues for future exploration.

One question that arises is whether an exclusive focus on high-entropy tokens might inadvertently neglect subtle but important nuances in low-entropy sequences, or if there's an optimal balance. Future research could explore adaptive masking strategies, where the degree of focus on high-entropy tokens is dynamically adjusted based on the complexity of the task or the model's current performance.

Additionally, applying this high-entropy focus to domains beyond reasoning, such as creative content generation or complex planning tasks, could unlock further breakthroughs in AI capabilities. All said and done, this research marks a significant step, paving the way for LLMs that not only understand language but truly master the art of strategic thought, and perhaps, one day, even the art of thinking fast and slow.